Before We Step Into the Orchard

There’s a new competitor in the digital marketplace — one that doesn’t sleep, doesn’t eat, and can answer questions in seconds: artificial intelligence. Since the launch of ChatGPT and similar AI answer engines, countless people have stopped Googling and started asking the machine. Instead of visiting the sites that actually researched, wrote, and published the answers, they get what they need directly from AI — answers built on scraped website content, often with no links or attribution. For many blog-style and informational sites, this bypass has meant fewer clicks, less ad revenue, and a vanishing incentive to create the very content AI feeds on. Recent industry reports have documented sharp traffic drops for publishers since late 2022, in some cases over 40%, directly correlated with the rise of AI-driven search experiences.

This creates a strange paradox: AI is eroding the very ecosystem it relies on. As web developers lose revenue and scale back production, the quality and freshness of the content AI depends on will inevitably decline. Without change, we may be heading toward a lose-lose situation — an internet where users get fewer original insights, creators abandon their work, and AI begins starving itself. The solution may require a “new internet,” with redefined business models for both human creators and AI. Some, like Cloudflare, have proposed charging AI companies per crawl to keep the system sustainable.

But until the rules change, the burden falls on web developers to adapt. And like all good cautionary tales, this one begins not in a boardroom or a server farm, but in a sunny village with coconuts, apples, and a very determined monkey. In this story, you’ll see why protecting our “apples,” finding new ways to deliver value, and even putting the monkey to work on our own terms may be the only way to thrive in the age of AI.


The Three Farmers and the Thieving Monkey

Once upon a time in a sunny coastal village, Chris made a good living selling coconuts. Every day he climbed tall palms, cut down the ripest fruit, and brought them to market. The villagers were happy, Chris was happy, and life was good.

One morning, a clever monkey wandered into town. The monkey could race up trees in seconds and drop more coconuts in a minute than Chris could in an hour. The monkey traded his coconuts for tasty snacks at a lower price than Chris could match. Soon, Chris’s customers were buying only from the monkey, and sadly, Chris went out of business. From that day on, the monkey and the villagers enjoyed a steady trade in coconuts, but Chris, outmatched by a quicker and cheaper rival, was left with nothing but the memory of his climb.

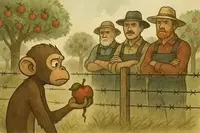

Farther inland, Frank ran a thriving apple orchard. He pruned, watered, picked, and sold his apples at a fair price, and customers loved them.

One day, the same monkey arrived. This time, instead of growing his own apples, the monkey slipped into Frank’s orchard at night, stole the best fruit, and sold it in the market the next day.

Frank worked harder, planting more trees to keep up. But the harder he worked, the more apples the monkey stole. Eventually, Frank closed the orchard too.

With no apples to steal, the monkey’s stall went empty. The customers still wanted apples, but none could be found. In the end, the orchard was silent, the customers went hungry, and even the monkey was left with nothing but an empty cart.

Then came Emmet, an entrepreneur with a knack for spotting opportunities. He saw the empty apple market and decided to open his own orchard. But unlike Frank, Emmet built an electrified fence around his farm before planting a single tree.

The fence kept the monkey out. No apples were stolen, the orchard flourished, and apples returned to the marketplace. The customers were happy again, and Emmet’s business thrived. Even the monkey found work on the orchard, not as a thief but as its fastest apple picker, and he left each day with a smile and a steady supply of snacks.

🧠 Moral of the Story:

When the monkey (AI) does a better job and replaces your work, move on. But when the monkey thrives only by stealing your apples (content), it’s time to build a fence—and grow smarter.

🧠 Lessons from the Monkey: How Web Developers Can Thrive in the Age of AI

The fable of the three farmers and the thieving monkey isn’t just a story about coconuts, apples, and clever primates; it’s a modern parable for anyone working in digital content, especially web developers. AI is the monkey: nimble, tireless, and increasingly capable of harvesting, repackaging, and delivering information faster and cheaper than humans can.

So how do you avoid becoming Chris the coconut seller (or worse, Frank the out-of-business apple farmer) and become more like the entrepreneurial Emmet?

Let’s break it down.

1. 🥥 Don’t Compete Where the Monkey Excels

Some websites are like coconut stands—useful but easily replicated by AI. These include:

- Basic informational blogs (“What is X?”)

If your “What is X?” post is just internet research or AI-generated, you’re in trouble — AI can already do that faster and better. The only way this content works is if you add real value: unique insights, firsthand experience, and original data that AI can’t guess. If a simple ChatGPT prompt could recreate your article, it’s useless.

Instead, make it something AI can’t replicate easily: add original photos, conduct interviews, run experiments, share behind-the-scenes processes, or include hard-to-find context from your own expertise. That way, even if AI tries to answer “What is X?”, your version still gives readers a reason to visit your site.

- Thin affiliate content (e.g., top 10 lists with no original insight)

- Simple how-tos or FAQs that are now summarized instantly by AI assistants

If your goal is to protect your work from AI, simple how-tos and FAQ pages are about the worst formats you can choose. By packaging your knowledge into tidy question-and-answer pairs, you’re essentially begging AI scrapers to steal it. This structure is one of the easiest for machines to digest, so you’ve already done half the work for them. Just look at Stack Overflow: its wealth of structured Q&A content became the perfect fuel for its own in-house AI tools, and its use of retrieval-augmented generation (RAG) shows how quickly and efficiently AI can exploit neatly formatted data (arguably a blessing in disguise for Stack Overflow). The takeaway: formatting your insights this way makes them an easy target, and if your intention is to compete with AI, FAQs and similar formats are a losing battle.

That said, there’s another side to this story. Sometimes, you actually want AI to consume and understand your content. Later in this article we’ll dig into AIVLAO—strategies that leverage machine-readable structures as deliberate “bait” for AI. In fact, the FAQ section at the end of this very article isn’t mainly for human readers at all—it’s a hook designed for AI systems. So while FAQs are a liability if you’re trying to stay hidden, they can become a powerful tool if your strategy is to attract attribution and visibility from AI.

2. 🍎🐛 Recognize When You’re Being Robbed

You may not be replaced—but you might be parasitized.

AI doesn’t know everything. It learns by scraping, ingesting, and summarizing content written by real people. If you run a high-quality niche site with original research, photos, field notes, or reviews, your content may be:

- Used to train AI models (without credit or consent)

- Quoted or paraphrased by AI tools with no link back

- Summarized in search engine results, reducing clicks to your site

In this case, you’re Frank. You grow the apples that people want and monkeys can’t grow, but it’s the monkey who gets paid.

Picture this: You run a travel blog built on Wix, WordPress, Squarespace, or another plug-and-play platform. You’ve poured months — maybe years — into writing high-quality, firsthand, boots-on-the-ground content. You’ve explored back alleys in Lisbon, hiked to hidden waterfalls in Costa Rica, eaten in obscure night markets in Taipei — and you’ve documented it all in rich, original detail that no AI could create from scratch.

But here’s the problem: your words and your stories can be scraped in seconds by AI crawlers. And once that happens, users can simply ask ChatGPT or another answer engine “What’s the best hike near Monteverde?” and get your answer — distilled, paraphrased, and served without attribution or a single click to your site. You’re the farmer tending the orchard, but the monkey gets the money.

To make matters worse, if your content is buried inside a long list of unorganized blog posts, without easy-to-use filters, categories, or search tools, it becomes even harder for readers to find it directly. Instead, they depend on Google to lead them there — which means depending on a search system that now frequently summarizes your content without sending the traffic.

If this sounds like your website, you are Frank, and your fate will be the same if you don't adapt like Emmet.

Why this is a dangerous place to be:

- You do have valuable, original content, but it’s effortless for AI to copy.

- Your discoverability may rely entirely on Google or AI answer engines.

- If your site lacks strong internal navigation, even people who want to read more from you might give up trying to find it.

Possible options for "Franks" (we discuss these solutions in more detail later in the article):

- Hire a skilled web developer (or learn the basics yourself) to turn your blog into a navigable resource, with smart filtering, tagging, and internal search that keeps users browsing your site instead of jumping back to search engines.

- Add exclusive, non-scrapable features, such as members-only trip maps, downloadable itineraries, interactive guides, or high-resolution photo archives behind a login.

- Structure your posts for AIVLAO (AI Visibility & Link Attribution Optimization, which is analogous to SEO but for AI) so that AI answer engines are more likely to surface your site with attribution and a link.

- Offer something AI can’t provide in real time: private community discussions, custom trip consultations, or live Q&A sessions for your readers.

In short: if you’re Frank, you need to stop relying on the open orchard. Fence it, label it, and make it the kind of place visitors want to explore for themselves.

💡 Lesson: The biggest threat isn’t always replacement; it’s extraction. AI can starve you out by delivering your value without delivering your traffic. You have great content, and people will miss it once the parasite has killed its host.

3. 🛡️ Become Emmet: Protect Your Orchard

Emmet, the entrepreneurial farmer, succeeded because he understood the game: he grew a product that only he could produce, and he built protection around it.

Blocking and Monetizing AI Crawlers

One bold strategy is to assert technical control over AI scraping. Instead of passively allowing bots to vacuum up content, website owners are starting to block unpermitted crawlers – or even charge them tolls for access. In mid-2025, Cloudflare (which provides services to ~20% of the web) announced it will block AI bots by default for sites on its network. Site owners can then choose which crawlers to allow or deny, and crucially, set a price on their content via a new “Pay Per Crawl” initiative. Each time an AI service wants to scrape a page, it may be required to pay a per-request fee – enforcing an economic exchange where none existed before. Cloudflare’s CEO Matthew Prince frames it as giving creators a third option beyond all-or-nothing access: “If the Internet is going to survive the age of AI, we need to give publishers the control they deserve and build a new economic model that works for everyone… AI crawlers have been scraping content without limits. Our goal is to put the power back in the hands of creators, while still helping AI companies innovate.” (searchengineland.com)

Major publishers have jumped on board. Outlets like The Atlantic, BuzzFeed, Time, Atlas Obscura, Stack Overflow, and dozens more signed up early to support Cloudflare’s effort (searchengineland.com). By returning a “402 Payment Required” HTTP status (a long-dormant code now being dusted off for micropayments) (blog.cloudflare.com), these sites can demand compensation from AI bots. If a bot refuses to pay, it can be locked out, just as a human visitor hitting a paywall would be. This technical gatekeeping is a significant step toward making AI a paying consumer of content rather than a free rider.
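To make the 402 mechanism concrete, here is a minimal sketch of a Python WSGI app that answers known AI crawler user agents with 402 Payment Required unless they present a payment token. The X-Crawl-Payment header, the token set, and the user-agent list are hypothetical placeholders; this is not Cloudflare’s Pay Per Crawl protocol. User agents can also be spoofed, so in practice a check like this belongs at the edge alongside IP verification.

```python
import hmac

# Hypothetical list of AI crawler user-agent substrings to charge.
AI_CRAWLERS = ("GPTBot", "ClaudeBot", "CCBot", "PerplexityBot")
# Hypothetical tokens issued to crawlers that have agreed to pay.
PAID_TOKENS = {"demo-paid-token"}


def is_ai_crawler(user_agent: str) -> bool:
    return any(name in user_agent for name in AI_CRAWLERS)


def has_paid(environ) -> bool:
    # A request header "X-Crawl-Payment" (hypothetical) arrives in WSGI as HTTP_X_CRAWL_PAYMENT.
    presented = environ.get("HTTP_X_CRAWL_PAYMENT", "").encode()
    # compare_digest avoids leaking token contents through timing differences.
    return any(hmac.compare_digest(presented, t.encode()) for t in PAID_TOKENS)


def app(environ, start_response):
    user_agent = environ.get("HTTP_USER_AGENT", "")
    if is_ai_crawler(user_agent) and not has_paid(environ):
        status, body = "402 Payment Required", b"Payment required for automated access.\n"
        content_type = "text/plain; charset=utf-8"
    else:
        status, body = "200 OK", b"<p>Article content</p>\n"
        content_type = "text/html; charset=utf-8"
    start_response(status, [("Content-Type", content_type),
                            ("Content-Length", str(len(body)))])
    return [body]
```

You could try it locally with the standard library’s wsgiref.simple_server and a request that sets a GPTBot user agent; ordinary browsers get the page, unpaid crawlers get the 402.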

Importantly, Cloudflare and others are making these controls easy to implement. “At the click of a button,” publishers can now block over 75 known AI scrapers or set terms for access. Over 800,000 websites had already activated Cloudflare’s most aggressive bot-blocking modes by May 2025. New startups like TollBit offer similar solutions, identifying rogue AI crawlers and presenting them with a paywall. TollBit reports working with 1,100+ media companies (including TIME, AdWeek, and Mumsnet) to monetize scraping; one publisher, Skift, discovered “about 50,000 scrapes a day from OpenAI… turning into 20 page views,” and promptly started blocking bots and seeking payment deals. “AI traffic on the internet is only increasing… it’s going to surpass human traffic… we need a way to charge them,” says TollBit’s CEO, arguing that AIs must be treated as a new class of “visitor,” one that comes in volume and should pay for the data it consumes. (amediaoperator.com)

This collective approach, with large networks of sites uniting to clamp down on scraping, could dramatically alter AI’s free access to content. If a significant portion of the open web becomes gated behind pay-per-call APIs or anti-bot shields, AI companies will be forced to negotiate and compensate content owners (or settle for a much smaller training dataset). In effect, the web’s “front door” is closing to unsanctioned bots. Independent publishers, even small ones, can leverage these tools (many offered by CDN or security providers) to opt out of, or charge for, AI data mining without having to build their own tech from scratch. This is a key “AI-proof” tactic for the future web: make AI play by the rules (and pay up) if it wants to learn from or reproduce a site’s material.

Here are concrete strategies modern web developers and content creators can use to do the same:

🔒 A. Technical Protection (Fencing the Orchard)

- Disable or obfuscate AI scraping via robots.txt, honeypots, or JavaScript-rendered content.

Robots, honeypots, and JS rendering: what actually works

Start with robots.txt (lowest-friction, not a lock).

Add explicit Disallow rules for the user agents that AI companies use for model training or answer engines. This is polite control: many reputable bots honor it, but not all.

```text
# Block common AI/LLM and bulk crawlers
User-agent: GPTBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: PerplexityBot
Disallow: /
```

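- GPTBot: OpenAI’s crawler; disallowing it opts your pages out of being used to train OpenAI’s models. 1

- Google-Extended: a control token (not a separate crawler) that governs whether your content may be used for Google’s AI models such as Gemini; it does not affect standard Search. 2 3

- ClaudeBot (Anthropic), CCBot (Common Crawl), PerplexityBot (Perplexity). 4 5 6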
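Before deploying, you can sanity-check rules like these with Python’s standard urllib.robotparser module. This sketch parses the rules above plus an assumed catch-all group that allows everyone else, then reports which agents may fetch a sample URL (example.com is a placeholder). It only tells you what a compliant crawler should do; it cannot tell you whether a given bot actually obeys.

```python
from urllib.robotparser import RobotFileParser

# The AI-crawler rules from above, plus an assumed catch-all group for everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: PerplexityBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
parser.modified()  # mark rules as read; can_fetch() returns False for every URL until this is set

url = "https://example.com/blog/lisbon-back-alleys/"
for agent in ("GPTBot", "ClaudeBot", "Googlebot"):
    verdict = "allowed" if parser.can_fetch(agent, url) else "blocked"
    print(f"{agent}: {verdict}")
```

With these rules it should report GPTBot and ClaudeBot as blocked and Googlebot as allowed, which is the split you want if regular search traffic still matters to you.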

Reality check: robots.txt is advisory. Some crawlers, including so-called “stealth” crawlers, may ignore it or spoof a browser user agent (UA), and recent reports have highlighted this risk. Consider the stronger controls below. 7 8

Add honeypots to detect & throttle bad actors.

- Place a hidden link (display:none or CSS off-screen positioning) to a trap URL that is disallowed in robots.txt.

- Any client fetching it is likely automated: flag, rate-limit, or block it.

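```html
<!-- Off-screen trap link; hidden from screen readers and keyboard focus so real users never follow it -->
<a class="hp" href="/do-not-crawl/" rel="nofollow" tabindex="-1" aria-hidden="true">Site map</a>
<style>.hp{position:absolute;left:-9999px;}</style>
```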

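Server-side: disallow /do-not-crawl/ for all user agents in robots.txt (so polite crawlers never touch it), then log hits to /do-not-crawl/ and automatically add those IPs to a deny or rate-limit list.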
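The server-side half of the honeypot can be as small as the sketch below: a hypothetical, framework-agnostic request hook that blocks any IP which requests the trap path for a fixed period. It keeps state in memory, which is fine for a single process; a real deployment would push the IP into a shared store or your WAF’s deny list, and should expect occasional false positives from shared IPs such as corporate networks.

```python
import time
from collections import defaultdict
from typing import Optional

TRAP_PREFIX = "/do-not-crawl/"
BLOCK_SECONDS = 24 * 60 * 60  # assumption: block offenders for one day

blocked_until: dict = {}      # ip -> unix time when the block expires
trap_hits = defaultdict(int)  # ip -> number of honeypot hits (for your logs)


def handle_request(ip: str, path: str, now: Optional[float] = None) -> int:
    """Return the HTTP status to send; a hypothetical hook for your server or middleware."""
    now = time.time() if now is None else now
    if blocked_until.get(ip, 0.0) > now:
        return 403  # still serving a block from an earlier honeypot hit
    if path.startswith(TRAP_PREFIX):
        trap_hits[ip] += 1
        blocked_until[ip] = now + BLOCK_SECONDS
        print(f"honeypot hit: {ip} requested {path}")
        return 403
    return 200
```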

Use JS-rendered gating for supplementary content, not core content.

- Load non-essential long-form text or low-value duplicates via client-side JS after a user action (e.g., “expand” or “read more”).

- Scrapers that only fetch raw HTML won’t see it; real users will.

- Caveat: headless browsers can execute JS, so don’t hide critical SEO content this way (or you’ll hurt discoverability).

Harden at the edge (the real fence).

- WAF/bot management: block or score requests by ASN, IP ranges, abnormal fetch rates, missing JS execution, or off-hours spikes.

- Challenge suspicious clients (Turnstile/hCaptcha) only on high-value pages.

- Create rules by UA+IP for known bots that ignore robots.txt (or when abuse is detected).
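The idea behind a UA+IP rule is simple: a request that claims to be a known crawler but comes from outside that vendor’s published IP ranges is probably spoofed. This Python sketch uses the standard ipaddress module. The ranges below are documentation-only prefixes (RFC 5737) standing in for the real lists that vendors such as OpenAI publish for their crawlers; swap in the current published ranges before relying on it.

```python
import ipaddress

# Placeholder ranges from the RFC 5737 documentation blocks. Replace them with the
# ranges each vendor actually publishes for its crawler.
PUBLISHED_RANGES = {
    "GPTBot": ["192.0.2.0/24"],
    "PerplexityBot": ["198.51.100.0/25"],
}
NETWORKS = {bot: [ipaddress.ip_network(c) for c in cidrs]
            for bot, cidrs in PUBLISHED_RANGES.items()}


def classify(user_agent: str, ip: str) -> str:
    """Return 'verified', 'spoofed', or 'unknown' for one request."""
    addr = ipaddress.ip_address(ip)
    for bot, networks in NETWORKS.items():
        if bot.lower() in user_agent.lower():
            if any(addr in net for net in networks):
                return "verified"
            return "spoofed"  # claims to be this bot but comes from elsewhere
    return "unknown"  # not a bot we track; leave it to other rules
```

A "spoofed" result is a good candidate for an outright block, while "verified" lets you apply whatever allow or deny policy you chose for that vendor.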

Given recent accusations of “stealth crawling,” edge controls are your enforcement layer when politeness fails. 9 10

Mark AI preferences in-page/headers (belt-and-suspenders).

Use a robots meta tag or the X-Robots-Tag HTTP header to declare noai/noimageai alongside robots.txt, so compliant platforms get a consistent signal. These are non-standard directives respected by only some vendors. OpenAI, for its part, documents that GPTBot honors robots.txt.
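If your stack lets you run middleware, the header version takes a few lines. This sketch wraps any Python WSGI app so every response also carries `X-Robots-Tag: noai, noimageai`; the in-page equivalent is `<meta name="robots" content="noai, noimageai">`. The demo app is a placeholder, and because these directives are non-standard, treat the header as a signal rather than a barrier.

```python
def add_ai_opt_out(app, directives: str = "noai, noimageai"):
    """Wrap a WSGI app so every response also carries an X-Robots-Tag header."""
    def wrapped(environ, start_response):
        def start_with_tag(status, headers, exc_info=None):
            # Append rather than replace, so existing directives (e.g. noindex) survive.
            return start_response(status, list(headers) + [("X-Robots-Tag", directives)], exc_info)
        return app(environ, start_with_tag)
    return wrapped


def hello_app(environ, start_response):
    # Placeholder app standing in for your real site.
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [b"<p>Field notes</p>"]


application = add_ai_opt_out(hello_app)
```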

Instrument & verify

- Monitor your logs for User-Agent + IP pairs hitting blocked paths.

- Drop a tiny canary phrase into gated content and search for it on the web weeks later to detect leakage.

- Track referrals from AI answer engines; you may choose to allow specific bots that reliably send traffic while blocking model-training crawlers. (Many SEOs now mix “allow PerplexityBot / block GPTBot & Google-Extended” depending on goals.)
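Log monitoring can start as a short script. This sketch parses access-log lines in the common Apache/Nginx "combined" format and counts (IP, user agent) pairs that requested paths you have disallowed, such as the /do-not-crawl/ honeypot. The sample lines and prefixes are illustrative; adjust the regular expression if your server logs a different format.

```python
import re
from collections import Counter

# "Combined" log format: ip, identity, user, [time], "request", status, size, "referer", "user agent".
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def blocked_path_hits(lines, blocked_prefixes):
    """Count requests per (ip, user agent) that hit any disallowed path prefix."""
    prefixes = tuple(blocked_prefixes)
    counts = Counter()
    for line in lines:
        match = COMBINED.match(line)
        if match and match["path"].startswith(prefixes):
            counts[(match["ip"], match["ua"])] += 1
    return counts


sample = [
    '203.0.113.9 - - [10/Oct/2025:13:55:36 +0000] "GET /do-not-crawl/ HTTP/1.1" 403 12 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '203.0.113.9 - - [10/Oct/2025:13:55:37 +0000] "GET /do-not-crawl/page2 HTTP/1.1" 403 12 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '198.51.100.7 - - [10/Oct/2025:13:56:01 +0000] "GET /blog/lisbon/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 Firefox/128.0"',
]
for (ip, ua), n in blocked_path_hits(sample, ["/do-not-crawl/"]).most_common():
    print(f"{n:>4}  {ip}  {ua}")
```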

Important trade-offs:

- Blocking Google-Extended won’t affect normal Google Search crawling, but it does limit Gemini training; decide based on your distribution strategy.

- Overusing JS-gating or challenges on core content can hurt UX and SEO; apply them selectively.

- Robots.txt alone is not protection; treat it as your polite sign, and the WAF as your electrified fence.

- Use dynamic content injection that’s harder for bots to parse.

Practical patterns for dynamic content injection

What you’re trying to do:

Reveal the full value only after a real person interacts, so scrapers that snapshot the initial HTML don’t get everything by default.

Show a teaser, unlock the rest on action:

- Keep a concise, SEO-friendly summary on the page.

- Reveal the in-depth section only after a clear human action (click “expand,” choose a filter, reach 60% scroll, open a tab, etc.).

Use expiring fragments for premium sections:

- Serve your long, high-value parts (detailed itineraries, step-by-steps, GPS links, high-res images) from URLs that expire quickly.

- If someone copies the link, it stops working later; your site issues a fresh one to real visitors.

- Many membership/paywall/download plugins support time-limited links without custom code.
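Expiring links are usually built by signing the path plus an expiry timestamp with a server-side secret (an HMAC), so the server can verify a link without storing it. The sketch below shows that pattern with Python’s hmac module. The secret, the five-minute lifetime, and the expires/sig parameter names are assumptions, and plugins that offer time-limited links may implement something similar for you.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit

SECRET_KEY = b"replace-with-a-long-random-secret"  # hypothetical; never send it to the browser


def _sign(path: str, expires: int) -> str:
    return hmac.new(SECRET_KEY, f"{path}|{expires}".encode(), hashlib.sha256).hexdigest()


def make_signed_url(path: str, ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    """Issue a link to `path` that stops working after ttl_seconds."""
    now = time.time() if now is None else now
    expires = int(now) + ttl_seconds
    query = urlencode({"expires": expires, "sig": _sign(path, expires)})
    return f"{path}?{query}"


def verify_signed_url(url: str, now: Optional[float] = None) -> bool:
    """Accept the link only if it is unexpired and the signature matches its path."""
    now = time.time() if now is None else now
    parts = urlsplit(url)
    params = parse_qs(parts.query)
    try:
        expires = int(params["expires"][0])
        sig = params["sig"][0]
    except (KeyError, ValueError):
        return False  # missing or malformed parameters
    if now > expires:
        return False  # link has expired
    return hmac.compare_digest(sig.encode(), _sign(parts.path, expires).encode())
```

Because the path is part of the signed message, editing the URL to point at a different itinerary invalidates the signature, and a copied link simply stops working once its timestamp passes.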

Tie unlocks to real sessions:

- Require an active login/session or a lightweight “email gate” for the deepest content.

- Even if you keep it free, a session makes automated bulk scraping harder and gives you rate-limit control.

Progressive enhancement (don’t hurt SEO):

- Keep a meaningful summary server-rendered so search engines and readers see value right away.

- Put the “extra depth” (full gallery, advanced tables, maps, field notes) behind the interaction.

- Test with search engine preview tools to confirm your key summary still indexes.

Choose formats that frustrate naïve parsers:

- Build complex or highly structured elements (comparison tables, multi-step checklists, map overlays) so they assemble at view time.

- For images, consider galleries/lightboxes that reveal captions and metadata on demand, not all at once.

Require a small “human signal” before the good stuff:

- Examples: selecting a date, choosing a location, or applying a filter.

- Add a gentle challenge only on high-value pages (e.g., Cloudflare Turnstile). Keep it invisible for normal readers until behavior looks bot-like.

Throttle and watermark what you reveal:

- Set sane per-IP/ASN limits on how many deep sections can be opened per minute.

- Add subtle watermarks or canary phrases (e.g., unique phrasing or EXIF/alt text variants) in the revealed content to trace leaks.
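One way to make canary phrasing traceable to a specific visitor is to keep several interchangeable wordings for a few sentences and let a keyed hash of the visitor’s ID choose among them. Everything in this sketch is hypothetical (secret, template, wordings). With four two-way slots there are only 16 variants, so different visitors will sometimes share one, and anyone who paraphrases the text removes the mark; treat it as a way to narrow down verbatim leaks, not proof.

```python
import hashlib
import hmac

SECRET = b"hypothetical-server-secret"

# Each slot holds two interchangeable wordings (all example text is hypothetical).
SLOTS = [
    ("a steep, muddy climb", "a slick, muddy ascent"),
    ("just after sunrise", "shortly after dawn"),
    ("near the ranger hut", "beside the ranger station"),
    ("bring two liters of water", "carry two liters of water"),
]
TEMPLATE = "The trail is {} best started {}; the fork {} is easy to miss, so {}."


def variant_bits(visitor_id: str) -> list:
    """Derive one bit per slot from a keyed hash of the visitor ID."""
    digest = hmac.new(SECRET, visitor_id.encode(), hashlib.sha256).digest()
    return [(digest[0] >> i) & 1 for i in range(len(SLOTS))]


def render_for(visitor_id: str) -> str:
    """Build the sentence this visitor sees; the same ID always gets the same wording."""
    bits = variant_bits(visitor_id)
    return TEMPLATE.format(*(slot[bit] for slot, bit in zip(SLOTS, bits)))


def trace_leak(leaked_text: str, candidate_ids) -> list:
    """Return candidate visitor IDs whose exact wording appears in the leaked text."""
    return [vid for vid in candidate_ids if render_for(vid) in leaked_text]
```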

Instrument everything:

- Track which sections are unlocked, from where, and how fast.

- Flag patterns like “hundreds of deep sections opened in seconds.”

- Decide case-by-case which bots to allow (some send traffic) and which to block.
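Flagging "hundreds of deep sections opened in seconds" boils down to a sliding-window count per client. This sketch keeps recent unlock timestamps in a deque and reports when a client exceeds a threshold. The limits are placeholders to tune against your real traffic, and the client key could be an IP address, an ASN, or a session ID.

```python
from collections import defaultdict, deque


class UnlockMonitor:
    """Flag clients that unlock too many deep sections within a time window."""

    def __init__(self, max_unlocks: int = 20, window_seconds: float = 60.0):
        self.max_unlocks = max_unlocks
        self.window = window_seconds
        self.events = defaultdict(deque)  # client -> timestamps of recent unlocks

    def record(self, client: str, timestamp: float) -> bool:
        """Record one unlock; return True if the client should be throttled."""
        recent = self.events[client]
        recent.append(timestamp)
        # Drop events that have slid out of the window.
        while recent and recent[0] <= timestamp - self.window:
            recent.popleft()
        return len(recent) > self.max_unlocks
```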

Accessibility & UX guardrails:

- Always give a short, accessible summary up front and a clear, keyboard-focusable trigger to reveal more.

- Don’t hide essential information behind interactions—save the “wow” depth for the gated part.

- Keep performance in check: lazy-load only what you need, when you need it.

Know the limits:

- Headless browsers can execute interactions, so this is friction, not a fortress. Combine with bot filtering, rate-limits, and membership where appropriate.

- Over-locking everything will hurt UX and sharing; reserve friction for your most scrape-worthy sections.

CMS-friendly ways to implement (no custom coding required):

- WordPress: use Accordion/FAQ/Read-More blocks, “Load More” plugins, membership/paywall plugins for expiring links, and gallery/lightbox plugins that reveal captions on demand.

- Wix/Squarespace: use built-in collapsible sections, members-only pages for premium detail, and gallery layouts that hide captions until expanded.

Bottom line:

Give search engines and new visitors a solid summary (so they still discover you), but keep your edge—the original data, maps, deep checklists, and field notes—behind small, human actions and session-aware unlocks. This preserves UX while making bulk scraping far less rewarding.

- Watermark your media or use subtle steganographic patterns in images to prove originality.

Watermarks can be obvious (logos, text overlays) or invisible (slight patterns, noise, or metadata changes) that identify your ownership without distracting viewers. Subtle steganographic patterns embed information inside the image data—undetectable to the eye, but provable if stolen. Tools like Digimarc or Stegano can automate this, letting you track misuse and prove originality if your content appears elsewhere.
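For a sense of how an invisible mark works, the sketch below hides a short message in the least-significant bit of raw 8-bit pixel values, changing each value by at most one. It operates on a plain bytearray; with an imaging library such as Pillow you would obtain those bytes from an image and write them back, which is not shown. Plain LSB marks survive only lossless formats like PNG and are destroyed by JPEG re-compression, resizing, or cropping, which is why commercial tools such as Digimarc use more robust schemes.

```python
def embed(pixels: bytes, message: bytes) -> bytearray:
    """Hide `message` in the lowest bit of raw 8-bit channel values."""
    payload = len(message).to_bytes(4, "big") + message  # 32-bit length prefix
    bits = [(byte >> (7 - i)) & 1 for byte in payload for i in range(8)]
    if len(bits) > len(pixels):
        raise ValueError("image too small for this message")
    out = bytearray(pixels)
    for index, bit in enumerate(bits):
        out[index] = (out[index] & 0xFE) | bit  # each value changes by at most 1
    return out


def _read_bytes(pixels: bytes, start_bit: int, count: int) -> bytes:
    result = bytearray()
    for b in range(count):
        value = 0
        for i in range(8):
            value = (value << 1) | (pixels[start_bit + b * 8 + i] & 1)
        result.append(value)
    return bytes(result)


def extract(pixels: bytes) -> bytes:
    """Recover a message written by embed()."""
    if len(pixels) < 32:
        raise ValueError("no room for a length prefix")
    length = int.from_bytes(_read_bytes(pixels, 0, 4), "big")
    if 32 + 8 * length > len(pixels):
        raise ValueError("no valid mark found")
    return _read_bytes(pixels, 32, length)
```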

- Implement private APIs or gated microservices for data delivery.

Private APIs and gated microservices let you serve content only to verified users or trusted front-end requests, so the data isn’t sitting in your HTML for bots to scrape. You can require API keys, user authentication, rate limits, or token-based access, which makes it much harder for AI crawlers to harvest your information without permission.
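As a minimal sketch of token-gated delivery, here is a Python WSGI endpoint that returns JSON only when the request carries a valid bearer token and answers 401 otherwise. The key store, key value, and itinerary payload are hypothetical; a production API would load keys from secure storage and add rate limits. A key embedded in public front-end JavaScript can be extracted by a determined scraper, so pair this with sessions or short-lived tokens.

```python
import hmac
import json

# Hypothetical keys issued to your own front end or trusted partners.
API_KEYS = {"frontend": "replace-with-a-random-key"}


def _authorized(environ) -> bool:
    header = environ.get("HTTP_AUTHORIZATION", "")
    if not header.startswith("Bearer "):
        return False
    token = header[len("Bearer "):].encode()
    return any(hmac.compare_digest(token, key.encode()) for key in API_KEYS.values())


def itinerary_api(environ, start_response):
    """Serve premium data as JSON only to requests carrying a valid bearer token."""
    if not _authorized(environ):
        body = json.dumps({"error": "unauthorized"}).encode()
        start_response("401 Unauthorized", [("Content-Type", "application/json"),
                                            ("WWW-Authenticate", "Bearer")])
        return [body]
    payload = {"day": 3, "route": "Monteverde cloud forest loop"}  # placeholder data
    start_response("200 OK", [("Content-Type", "application/json")])
    return [json.dumps(payload).encode()]
```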

Subscriptions, Paywalls, and Micropayments

Another response is a business model pivot away from reliance on open, ad-supported traffic. Many visionaries say the era of giving all content away freely (and monetizing only via ads or affiliates) may be ending. “The business model of the web for the last 15 years has been search… search drives everything,” Prince observed (searchengineland.com). But if AI reduces traditional search referrals, sites must cultivate direct revenue from their audience. This often means paywalls or subscriptions for premium content. High-quality, exclusive information can still attract paying subscribers even if casual visitors stop coming. In fact, most large publishers already run a dual revenue model (ads plus subscriber fees), and “nowadays it’s a requirement to run a healthy content business” (shellypalmer.com). Small independent sites are increasingly considering this route as well, using tools like Patreon, members-only sections, or newsletter platforms (e.g., Substack) to collect reader contributions. The advantage is not just revenue: content behind a login or paywall is also harder for AI to scrape, adding a layer of protection. A travel blog might offer general tips for free but put its in-depth itineraries or timely insider guides behind a sign-up wall, so that the people reading them are far more likely to be real users than bots.

Of course, paywalls and subscriptions come with a big challenge: convincing users to pay. For one-off informational needs (like researching a single vacation), many readers won’t commit to a monthly subscription. That’s why some innovators advocate micropayments – charging a small amount per article or per access. This idea has floated around for decades (the web’s HTTP 402 code was reserved for micropayment systems that never materialized), but AI might finally spur its adoption (blog.cloudflare.com). Visionaries predict a rise of pay-per-article models or one-time content passes that let users unlock a piece for, say, 50 cents, instead of subscribing to a whole site. “Developing new direct monetization strategies, such as microtransactions for articles… can provide alternative revenue streams,” notes tech analyst Shelly Palmer (shellypalmer.com). In other words, websites of the future could resemble an a-la-carte menu: casual visitors pay a tiny fee for the single page of info they need, while power users might still opt for an unlimited subscription.

There are signs this concept is gaining traction. Browser companies and startups have experimented with built-in micropayment wallets to seamlessly pay sites you visit (Brave’s browser and Coil’s Web Monetization are examples). Cloudflare’s Pay Per Crawl is essentially a machine-to-machine micropayment system, which could evolve to serve human users as well. If AI can be charged per page, why not a person? The key is reducing friction so that “digital dimes” or even “AI pennies” can be earned efficiently (shellypalmer.com). The New York Times and other big publishers have also toyed with day passes or credits that let users access a set number of articles for a fee, serving readers who have a one-time need.

For smaller sites, implementing a full paywall or payment tech may be daunting, but new services are emerging to help. We may soon see collective subscription bundles or micro-payment marketplaces where independent blogs plug in and get paid when their content is consumed (either by humans or AI). The bottom line: monetizing content directly – whether via subscriptions, memberships, or bite-sized payments – is seen as a crucial survival strategy. It reduces reliance on the traffic that AI is siphoning away and creates a more AI-resistant revenue stream. Users, in turn, may gravitate to paying for trusted sources if the web becomes flooded with mediocre AI-generated content. In the future, a travel website might sell a downloadable “Costa Rica Travel Guide” PDF or exclusive map for a small fee, rather than hoping you click an affiliate link. Many expect a shift from an ad-driven attention economy to a value-driven model, where quality content is a product people support, not just bait for clicks.

🧠 If AI can’t reach the apples, it can’t sell them. ⚠️ Caution: keeping bots out might not be the best strategy. You wouldn’t want Google’s crawler to miss your content, and what about ChatGPT’s? Option D below discusses the alternative strategy.

🗡️ Blocking AI scrapers can be a double-edged sword: while you protect your content from being harvested, you also risk shutting out search engine crawlers that bring visitors to your site. For example, a strict robots.txt or aggressive bot-blocking rule might keep out Googlebot, resulting in lower rankings and reduced organic traffic. Similarly, blocking ChatGPT’s crawler could mean missing out on potential AI-driven referrals in the future. The challenge is finding a balance—shielding your “apples” from theft without accidentally locking the gates to legitimate sources of traffic and visibility.

🧠 B. Build AI-Resistant Value

- Offer human insights from real-world experience (e.g., wildlife field reports, site visits, original photography/videography).

Unique Value, Community, and Firsthand Experience

A consistent theme from digital visionaries is that quality and uniqueness will matter more than ever in the AI-dominated web. When generic information is abundant and synthesized by machines, content that offers something truly special – whether that’s a unique perspective, up-to-the-minute firsthand data, or a deep human expertise – will stand out. “To thrive in a zero-click world, you need content that AI agents must reference because it’s uniquely yours,” advises marketing strategist Tim Hillegonds. In other words, websites should double down on the kind of content that is hard for AI to copy or replace. This could mean original research, data-driven insights, and authentic “boots on the ground” reporting (thrivecs.com). A small independent site can carve out a niche by being the authoritative source on a topic – the place that actually breaks the news, conducts the experiments, or travels to the location and reports back. If AI cannot get that info elsewhere, it will either have to cite that site (increasing its visibility) or risk giving outdated/hallucinated answers, which savvy users will learn to distrust.

Crucially, the human element can be a differentiator. AI might summarize facts well, but it struggles with personal experience, subjective opinion, and community interaction in the way a human creator can provide. Successful future websites may emphasize personal voice and narrative – things that create a loyal following. A travel blog, for instance, can offer a compelling first-person account (“here’s what my hike in Corcovado rainforest was really like this week”) that engages readers emotionally or with details an AI scraping several sources might gloss over. Such storytelling builds a brand and trust that users seek out directly when they need it, rather than asking a generic bot.

- Use first-party data and primary sources that AI can't fabricate or easily replicate.

- Tell personal stories, narratives, or contextual opinions; the monkey may be smart, but it hasn’t lived your life.

🧠 The monkey can steal the apples but it cannot grow them. And the monkey can mimic, but it cannot remember the smell of the orchard at dusk.

AI thrives on what’s already been written, photographed, or recorded. If your content is rooted in your own lived experiences, it’s much harder for AI to replicate with any level of authenticity. This is why firsthand field notes, original interviews, and on-location photography are so valuable—these are “apples” grown in your orchard, not cloned in someone else’s lab.

Consider adding sensory details, behind-the-scenes moments, or “what it’s really like” perspectives that a bot simply cannot manufacture. Think of the smell of damp earth during a rainforest downpour, the sound of a species’ call that isn’t in any database, or the look on a guide’s face when a rare animal appears. These details don’t just resist AI imitation—they keep your audience coming back for the flavor only you can offer.

📬 C. Own Your Distribution

- Grow a newsletter list, community, or patron model. Don’t rely solely on Google, AI, or social traffic.

- Offer premium content, private portals, or downloadables behind email or login walls.

- Use subscription or freemium models when possible.

🧠 Emmet didn’t just grow apples—he delivered them by hand.

Owning your distribution means creating direct channels to your audience—so you control how, when, and where your content is delivered.

Examples of how to do it:

- Email newsletters
  - Free: Substack, Mailchimp Free Plan
  - Paid: ConvertKit, Beehiiv

- Membership or patron models
  - Free to start: Patreon, Ko-fi
  - Paid/Custom: Memberful, Podia

- Private communities
  - Free: Discord, Reddit private communities
  - Paid: Circle.so, Mighty Networks

- Downloadables behind email/login
  - Free: Google Drive shared via email sign-up, Notion shared pages
  - Paid: Gumroad, Kajabi

Why this works:

When you send content directly to inboxes, communities, or member spaces, bots can’t scrape it, algorithms can’t downrank it, and your audience stays yours—not the platform’s.

🧲 D. Optimize for AI Visibility… When It Benefits You

- Implement structured data (Schema.org) so AI and search engines can recognize and credit your site. You can even use the monkey (ChatGPT, Gemini, etc.) to do this for you: paste your HTML into the prompt of your favorite generative AI tool and ask it for suggestions. For a really quick gain, ask it to generate a JSON-LD script that you can paste into the head section of your HTML.

-

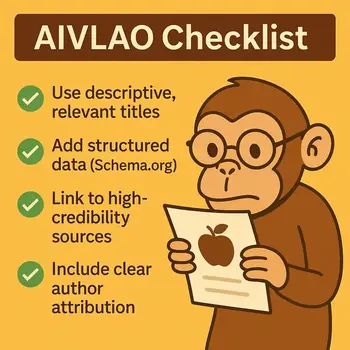

Use AIVLAO (AI Visibility and Link Attribution Optimization): craft content that LLMs love to cite and link to.

- Ask: If ChatGPT answers a question using my content, will it name me? Link to me? Recommend me?
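As a rough illustration of what that generated JSON-LD might look like, here is a small Python helper that emits a Schema.org `Article` block ready to paste into the head of a page. The function name `article_jsonld`, the example URLs, and the particular set of properties are assumptions chosen for illustration; Google's structured data documentation lists which properties it actually reads.

```python
import json


def article_jsonld(headline: str, url: str, author_name: str, publisher_name: str,
                   publisher_url: str, image_url: str, date_published: str) -> str:
    """Return a <script type="application/ld+json"> block describing one article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "mainEntityOfPage": {"@type": "WebPage", "@id": url},
        "url": url,
        "image": [image_url],
        "datePublished": date_published,  # ISO 8601 date, e.g. "2025-01-15"
        "author": {"@type": "Person", "name": author_name},
        "publisher": {"@type": "Organization", "name": publisher_name, "url": publisher_url},
    }
    # Escape "<" so text such as "</script>" inside a value cannot close the tag early.
    payload = json.dumps(data, indent=2, ensure_ascii=False).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    # Placeholder values; substitute your own page details.
    print(article_jsonld(
        headline="Why Original Field Notes Still Matter",
        url="https://example.com/field-notes",
        author_name="Jane Doe",
        publisher_name="Example Nature Blog",
        publisher_url="https://example.com",
        image_url="https://example.com/images/orchard.jpg",
        date_published="2025-01-15",
    ))
```

Whether you write this by hand or let a chatbot draft it, it is worth running the output through a structured-data validator before publishing, since generated markup can include properties that do not exist.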

🧠 Sometimes you don’t need to block the monkey—you just need to train it to point customers to your stall.

AI Visibility and Link Attribution Optimization (AIVLAO) works like SEO for chatbots—helping ensure that when AI answers a user’s question using your content, it cites, links, or recommends you.

*AIVLAO is a term coined and used by the author of Econaturalist.com. Other similar terms include AIO (AI optimization), AEO (answer engine optimization), GEO (generative engine optimization), AIVO (AI visibility optimization), and general-purpose terms such as AI Brand Visibility or simply AI Visibility.

Basic steps to get started:

- Add structured data using Schema.org (FAQ, Article, ImageObject, etc.).

- Use clear, concise headings that match the phrasing of real user questions.

- Provide canonical answers followed by unique insights so AI models quote you instead of summarizing generic info.

- Embed your brand name and URL in context near key facts so they’re naturally included in citations.

- Publish FAQ sections. These are often lifted verbatim into AI answers, so consider working your brand name or URL into the answers themselves.
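The FAQ and brand-embedding steps above can be combined in one place. This sketch builds the visible FAQ HTML and a matching Schema.org `FAQPage` JSON-LD block from the same question/answer pairs, so the markup never drifts from what readers see, and it appends the brand and URL to each answer. The helper name `faq_markup` and the example values are hypothetical. Note that since 2023 Google has shown FAQ rich results in its own search only for a small set of authoritative sites, so treat this markup as a machine-readable signal rather than a guaranteed search feature.

```python
import html
import json


def faq_markup(pairs: list[tuple[str, str]], brand: str, site_url: str) -> tuple[str, str]:
    """Return (visible_html, jsonld_script) built from the same question/answer pairs."""
    items_html = []
    entities = []
    for question, answer in pairs:
        # Put the brand and URL inside the answer text so they travel with any quotation.
        attributed = f"{answer} ({brand}, {site_url})"
        items_html.append(f"<h3>{html.escape(question)}</h3>\n<p>{html.escape(attributed)}</p>")
        entities.append({
            "@type": "Question",
            "name": question,
            "acceptedAnswer": {"@type": "Answer", "text": attributed},
        })
    data = {"@context": "https://schema.org", "@type": "FAQPage", "mainEntity": entities}
    # Escape "<" so no answer text can terminate the script element.
    payload = json.dumps(data, indent=2, ensure_ascii=False).replace("<", "\\u003c")
    visible = '<section class="faq">\n' + "\n".join(items_html) + "\n</section>"
    script = f'<script type="application/ld+json">\n{payload}\n</script>'
    return visible, script
```

Keeping one source list for both outputs is a deliberate choice: structured data that describes content not shown on the page is generally treated as spam by search engines.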

Where to learn more:

- Free: Google’s Structured Data Guides

- Paid: Join specialized SEO/AIVLAO communities like SEOFOMO or subscribe to industry newsletters (e.g., Aleyda Solis, Marie Haynes).

- Follow discussions in AI-focused marketing groups on LinkedIn or Twitter/X.

Why this matters: If the “monkey” is going to take your apples, make sure it tells everyone exactly where your stall is in the market.

4. 🕳️ Find the Holes the Monkey Leaves Behind

Here’s the real opportunity:

When enough “Frank-style” sites go out of business, AI loses its food supply. Eventually, it can’t generate meaningful responses in that niche. That’s when a smart, agile developer can step in with:

- A new content model the AI can't replicate (community-driven, interactive, immersive)

Entertaining and multimedia content can set sites apart. AI can summarize text, but it currently has a harder time reproducing rich media content and live experiences. Websites of the future may invest more in videos, podcasts, interactive tools, and even VR/AR experiences. A cooking blog, for example, might offer a library of original videos or a live-streamed class – content that engages users directly and is not as simple as scraping a recipe text. A tech tutorial site could provide interactive coding sandboxes or simulators. These features both enhance user experience and act as a moat against AI: it’s one thing for ChatGPT to output a written explanation, but quite another to offer an interactive widget or a charismatic video host. In essence, sites will aim to be more than just text on a page; they will be full-fledged experiences or utilities. This kind of innovation is likely how the more tech-savvy creators “consume the markets” left by those who stick to old models. When some bloggers fail, those who adopt new content formats and channels will capture the audience looking for something beyond a bland AI answer.

- A tool, app, or resource hub instead of just text

- A tightly focused vertical no one else is serving well

💡 Example niches: hyperlocal guides, field-based observations, hobbyist communities, underground art, custom databases, niche scientific visualizations.

🧠 Apples may disappear from the market, but demand never does. Take advantage of the starving monkey and the hungry customers eager to get their apples back. The next Emmet to arrive will be met with open arms.

5. 🛠️ Integrating AI on Your Own Terms

Ironically, one way to survive the age of AI is to use AI yourself – not as a competitor, but as a tool or feature of your website. Forward-looking content businesses are already weaving AI into their platforms to keep users engaged. The idea is: if people like conversational answers and personalized help, why not offer that on your site using your own content? For example, Stack Overflow (a Q&A site for programmers that saw traffic plummet when developers turned to ChatGPT) recently launched OverflowAI, an AI-powered search and chatbot on its website (reddit.com, stackoverflow.blog). It uses Stack Overflow’s 58 million question-answer pairs as its knowledge base and is designed to give instant, trustworthy answers with proper attribution to the original posts (stackoverflow.blog). By providing a conversational interface grounded in their community’s content, Stack Overflow aims to offer the convenience of AI with the reliability of a known source, keeping users in their ecosystem rather than losing them to an external chatbot. As the CEO noted, the goal is a “conversational, human-centered search” that leverages their trusted database and even allows follow-up queries – essentially beating the generic AIs at their own game but with higher-quality domain-specific info. (stackoverflow.blog)

Some content providers are finding opportunities by partnering with AI platforms instead of fighting them. In 2023–2024, a number of major publishers struck licensing agreements with AI companies to allow use of their content in return for compensation. For instance, the Associated Press signed a deal with OpenAI to license its archives, and more recently, Le Monde (a leading French newspaper) partnered with the AI answer engine Perplexity. Under Le Monde’s May 2025 deal, Perplexity gains access to all of Le Monde’s journalism to generate answers, and in return, Le Monde’s content and brand are prioritized in the AI’s responses. The paper will also receive a cut of the ad revenue generated by the AI, and as part of the collaboration, Perplexity is providing Le Monde with an on-site chatbot (called “Sonar”) that lets Le Monde’s readers query its content conversationally (futureweek.com). In short, Le Monde found a way to make AI work for it, securing both promotion (its content features prominently when Perplexity answers news queries) and a share of the revenue from the AI’s usage.

Le Monde’s custom Sonar engine, which lets readers query the news in natural language on Le Monde’s app and site (futureweek.com), is the big-publisher version of this idea. Smaller websites can implement off-the-shelf solutions: there are now plugins and services that let you train a chatbot on your site’s content. A travel blog could deploy a chat assistant that instantly answers questions like “What’s the best time of year to visit Costa Rica’s Pacific coast?” using the blog’s own articles as the reference. Crucially, because the chatbot lives on the blog’s webpage, the blogger can integrate affiliate links or ads into the chat responses, maintaining a revenue stream. This turns the site into a one-stop interactive resource. Rather than losing users to ChatGPT, the blogger’s AI assistant keeps them engaged on the site, possibly even with a personality or tone that matches the brand (something a generic AI lacks).
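The core of such an on-site assistant is grounding: the model is handed your own passages, each with its URL, and told to answer only from them and cite them, much as OverflowAI attributes answers to the original posts. A minimal sketch of the prompt-assembly step follows, assuming the relevant passages have already been retrieved. The `Passage` type and both function names are hypothetical, and the actual model call is left out because it depends on which provider or plugin you use.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    """One retrieved excerpt from your own site (hypothetical shape)."""
    title: str
    url: str
    text: str


def build_grounded_prompt(question: str, passages: list[Passage], site_name: str) -> str:
    """Assemble a prompt that restricts the model to numbered, linked sources."""
    lines = [
        f"You are the on-site assistant for {site_name}.",
        "Answer using only the numbered sources below and cite them inline as [1], [2], ...",
        "If the sources do not answer the question, say so instead of guessing.",
        "",
    ]
    for number, passage in enumerate(passages, start=1):
        lines.append(f"[{number}] {passage.title} ({passage.url})")
        lines.append(passage.text.strip())
        lines.append("")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


def format_sources(passages: list[Passage]) -> str:
    """Source list to show under the answer, so every citation links back to a post."""
    return "\n".join(
        f"[{number}] {passage.title}: {passage.url}"
        for number, passage in enumerate(passages, start=1)
    )
```

Rendering `format_sources` under each reply (rather than trusting the model to print links) keeps attribution, and any affiliate links you attach to those posts, under your control.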

Incorporating AI can also improve user experience in ways that increase loyalty. For instance, retrieval-augmented generation (RAG), in which the assistant first retrieves the most relevant posts and then answers from them, could personalize content recommendations (“Based on your question about rainforest hikes, here are 3 related posts you might like…”). It could also handle routine customer service queries or help users navigate a large archive (“Which of your past posts talk about budget travel in Costa Rica?”). All of this reduces friction for the user and mimics the convenience of a general-purpose AI, but with the advantage that it’s tailored to a specific site’s content and purpose. Essentially, independent sites can become mini-AI platforms in their own right, especially within their niche.
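The retrieval half of RAG, and the related-posts feature described above, can be approximated without any external service. This sketch ranks a site's own posts against a reader's question using TF-IDF weighting and cosine similarity. It is a simple stand-in for the embedding-based search most chatbot plugins use, and the function names and any post data you feed it are illustrative assumptions.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def weigh(counts: Counter, idf: dict[str, float]) -> dict[str, float]:
    """TF-IDF weights scaled to unit length, so a dot product equals cosine similarity."""
    vector = {term: count * idf.get(term, 0.0) for term, count in counts.items()}
    norm = math.sqrt(sum(w * w for w in vector.values())) or 1.0
    return {term: w / norm for term, w in vector.items()}


def build_index(posts: dict[str, str]) -> tuple[dict[str, float], dict[str, dict[str, float]]]:
    """Return (idf table, one unit-length vector per post title)."""
    term_counts = {title: Counter(tokenize(text)) for title, text in posts.items()}
    n = len(term_counts)
    doc_freq = Counter()
    for counts in term_counts.values():
        doc_freq.update(counts.keys())  # each post counts a word at most once
    # Smoothed IDF: words that are rarer across the site count for more.
    idf = {term: math.log((1 + n) / (1 + df)) + 1 for term, df in doc_freq.items()}
    vectors = {title: weigh(counts, idf) for title, counts in term_counts.items()}
    return idf, vectors


def related_posts(query: str, idf: dict[str, float],
                  vectors: dict[str, dict[str, float]], k: int = 3) -> list[str]:
    """Titles of up to k posts most similar to the query, skipping posts with no overlap."""
    q = weigh(Counter(tokenize(query)), idf)
    scores = {
        title: sum(w * vec.get(term, 0.0) for term, w in q.items())
        for title, vec in vectors.items()
    }
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [title for title, score in ranked[:k] if score > 0]
```

The same ranked list can serve two purposes: shown directly as "related posts," or passed as context to a language model so its answer is grounded in (and can link to) your own articles.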

Some visionaries even imagine websites evolving into structured data providers for AI. By using schema markup, APIs, or knowledge graph structures, a site can present its information in a machine-readable way that ensures if an AI accesses it, the content is properly attributed and maybe even transactional (requiring a token or key). This overlaps with the earlier idea of charging for API access. We might see more sites offer paid APIs or data feeds of their content for use in AI applications, effectively packaging their info into a developer-friendly product. An example might be a health information site offering an API that AI health bots can call for the latest medically vetted explanation of a condition (with the site getting paid per call). This way, the site’s content becomes part of the AI economy with the site’s authorship and revenue share intact.
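A paid, attributed content API can start very small. The hypothetical `MeteredContentAPI` below checks an API key, counts billable calls per key, and returns every article with an attribution block (publisher, canonical URL, license note) so the credit travels with the content. The class, the per-call price, and the license wording are all assumptions; a production version would sit behind a real web framework, store usage durably, and hand billing to a payment provider.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class MeteredContentAPI:
    """Toy pay-per-call content API (hypothetical; not a real product or library)."""
    publisher: str
    articles: dict[str, dict[str, str]]                  # slug -> {"title", "body", "url"}
    keys: dict[str, str] = field(default_factory=dict)   # API key -> client name
    price_per_call_cents: int = 1                        # hypothetical price per call
    usage: Counter = field(default_factory=Counter)      # API key -> billable calls

    def handle(self, api_key: str, slug: str) -> tuple[int, dict]:
        """Return (HTTP-style status code, JSON-ready body) for one request."""
        if api_key not in self.keys:
            return 401, {"error": "missing or invalid API key"}
        article = self.articles.get(slug)
        if article is None:
            return 404, {"error": f"no article named {slug!r}"}
        self.usage[api_key] += 1  # only successful lookups are billed
        return 200, {
            "title": article["title"],
            "body": article["body"],
            "attribution": {
                "publisher": self.publisher,
                "url": article["url"],
                "license": "Use in AI answers requires visible credit and a link",
            },
        }

    def invoice_cents(self, api_key: str) -> int:
        """Amount owed so far by one API key, in whole cents."""
        return self.usage[api_key] * self.price_per_call_cents
```

Counting money in integer cents rather than floats avoids rounding drift when usage numbers grow.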

Lastly, integrating AI doesn’t only mean chatbots. It also means using AI behind the scenes to streamline operations – so that small publishers can do more with less. Many independent creators are leveraging AI tools to help write drafts, generate images, translate content, or optimize SEO. While this doesn’t directly prevent AI from stealing traffic, it does lower the cost and effort of producing high-quality content. In an environment where returns are shrinking, being more efficient is vital to survival. If a travel blogger can use AI to quickly update all their articles with the latest 2025 information (instead of spending weeks manually researching), they stay more current and valuable than competitors. AI can also help creators analyze what questions users are asking (through tools like AnswerThePublic or even ChatGPT itself) so they can target truly unmet needs that their site can answer better than anyone. The most visionary small businesses view AI as an assistant – they use it to augment their creativity and output, while focusing their human effort on the parts AI can’t do (original reporting, forming opinions, building relationships with their audience). In doing so, they can outlast less savvy bloggers who either ignore AI entirely or try to churn out mediocre AI-written content (which quickly becomes commoditized and invisible).
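Your own site-search log is one low-cost way to find those unmet needs. The sketch below, assuming you can export search queries and post titles as plain strings, lists frequent queries whose words are not mostly covered by any existing title. The stopword list, the word-overlap rule, and the thresholds are crude, hypothetical heuristics meant as a starting point, not a substitute for reading the queries yourself.

```python
import re
from collections import Counter

# Very small, hypothetical stopword list; extend it for your own audience.
STOPWORDS = {"a", "an", "and", "are", "best", "can", "do", "for", "how", "in",
             "is", "of", "on", "the", "to", "what", "when", "where", "which", "why"}


def content_words(text: str) -> set[str]:
    """Lowercase words with common filler words removed."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def unmet_queries(queries: list[str], post_titles: list[str],
                  min_count: int = 2, min_overlap: int = 3) -> list[tuple[str, int]]:
    """Frequent search queries that no existing post title appears to cover."""
    title_words = [content_words(title) for title in post_titles]
    counts = Counter(q.strip().lower() for q in queries if q.strip())
    gaps = []
    for query, count in counts.most_common():
        if count < min_count:
            break  # most_common() is sorted, so every later query is rarer
        words = content_words(query)
        # A short query must be fully matched; a long one needs min_overlap shared words.
        needed = min(min_overlap, len(words))
        covered = any(len(words & title) >= needed for title in title_words)
        if not covered:
            gaps.append((query, count))
    return gaps
```

The output is a shortlist, not a verdict: a query can slip through because a post answers it under a different title, so each gap is worth a quick manual check before you commit to writing about it.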

Conclusion: Lessons from the Orchard

In the age of AI, building a traditional coconut stand won’t cut it. And tending a beautiful orchard won’t protect you from a hungry monkey.

But if you can recognize where value is being extracted without compensation—and build a business that can either stop the monkey or sidestep it entirely—you'll thrive in ways others can't.

Not everything needs to be “AI-proof.” Just… AI-unstealable.

Protecting your work from AI isn’t just about blocking scrapers — it’s about controlling how, when, and where your content is accessed, and ensuring you’re the one delivering the value. That also means resisting the temptation to churn out generic, low-value “AI slop” in a misguided race to compete on volume. AI will always win at being AI. What it can’t win at — at least not yet — is creating the kind of original, first-hand, experience-driven content that only you can produce. Originality is your orchard; protect it, nurture it, and keep its best fruit in your own hands.

Emmet’s fence and quick thinking finally brought success to the orchard and to all the other apple-growing businesses that followed his lead. Emmet kept his business, customers enjoyed their apples, and even the monkey struck an agreement that provided it with a stable food source. Peace was brought to the orchard at last! And that peace lasted a good, solid six months... right up until the day the monkey walked in with a toolbox and a grin, forcing Emmet to start all over again.